Mind Map–Driven Testing: Charting the Untamed Terrain of Software Quality

Introduction

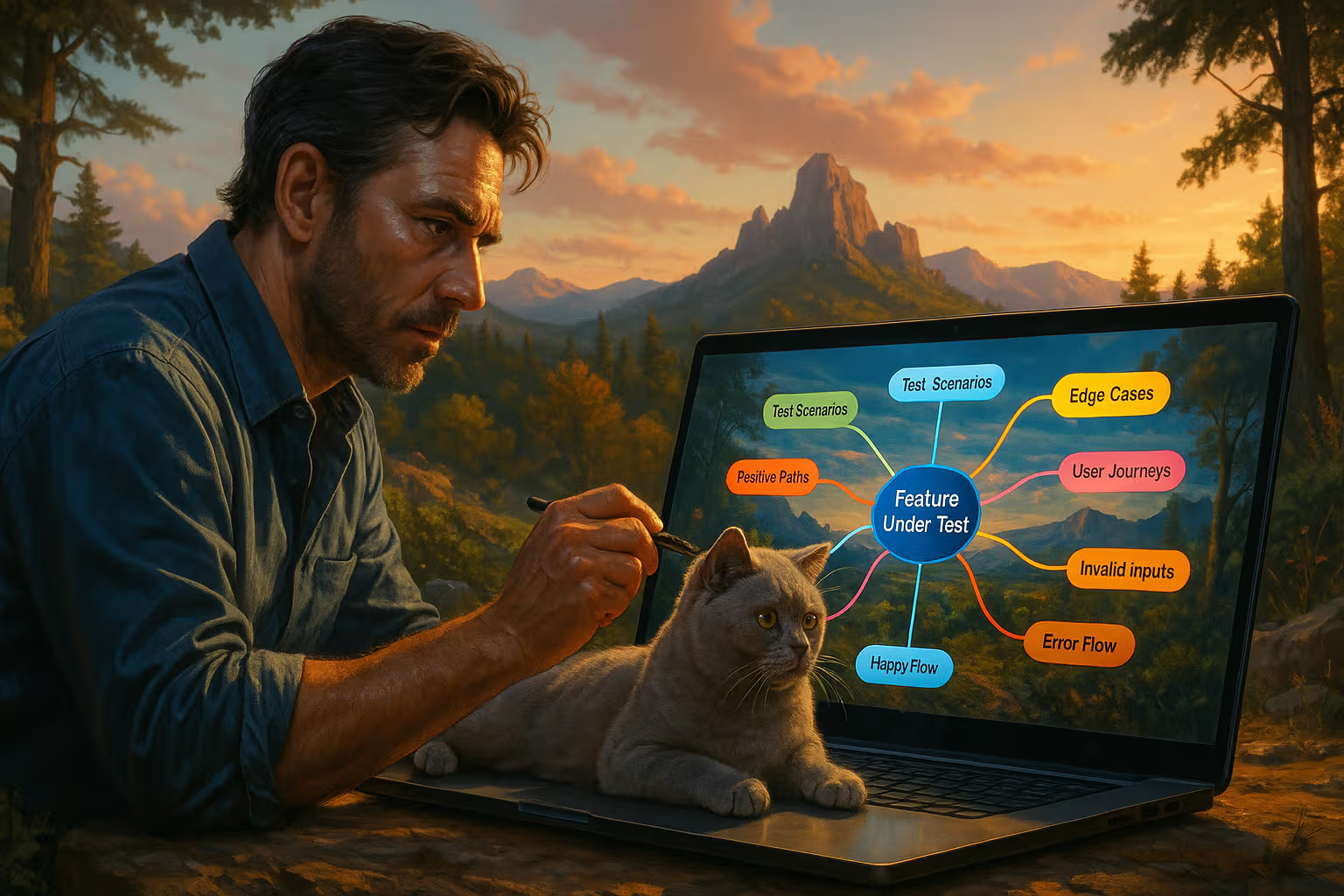

Exploratory testing often feels like wandering a vast, unmapped wilderness—rich with hidden faults but daunting in its sheer scale. Mind map–driven testing aims to tame that wilderness by turning abstract ideas into visual branches, guiding your QA journey with clarity and purpose. In this review, we delve into the InfoQ article on mind map–driven testing, reflecting on its core principles, practical tips, and the charming influence of a British lilac cat who insists on pawing at every idea node. Let’s embark on this creative trek together, armed with digital sticky notes and feline curiosity.

```mermaid
graph TD
    A[Feature Under Test] --> B(Test Scenarios)
    A --> C(Edge Cases)
    A --> D(User Journeys)
    B --> E[Positive Paths]
    B --> F[Negative Paths]
    C --> G[Boundary Conditions]
    C --> H[Invalid Inputs]
    D --> I[Happy Flow]
    D --> J[Error Flow]
```

The Art of Mind Map–Driven Testing

Mind map–driven testing starts with a central concept—often the feature under test—and radiates out into branches representing test scenarios, edge cases, and user journeys. This approach contrasts with traditional test suites that rigidly enumerate steps; instead, mind maps encourage serendipitous discoveries as testers follow organic connections. Picture a tree whose roots are stakeholders’ requirements and whose branches are colored by risk, priority, or complexity. Each offshoot might reveal a hidden bug or an unanticipated user behavior waiting to be examined.
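To make the radiating structure concrete, here is a minimal sketch (not taken from the InfoQ article) that models the diagram above as a tree and turns every root-to-leaf path into a one-line test charter. The `Node` class and the `charters` function are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One idea on the map (hypothetical structure, for illustration only)."""
    label: str
    children: list[Node] = field(default_factory=list)


def charters(node: Node, path: tuple[str, ...] = ()) -> list[str]:
    """Return one 'A > B > C' charter per root-to-leaf path."""
    path = path + (node.label,)
    if not node.children:
        return [" > ".join(path)]
    result: list[str] = []
    for child in node.children:
        result.extend(charters(child, path))
    return result


feature = Node("Feature Under Test", [
    Node("Test Scenarios", [Node("Positive Paths"), Node("Negative Paths")]),
    Node("Edge Cases", [Node("Boundary Conditions"), Node("Invalid Inputs")]),
    Node("User Journeys", [Node("Happy Flow"), Node("Error Flow")]),
])

for charter in charters(feature):
    print(charter)
```

Each printed line, such as "Feature Under Test > Edge Cases > Invalid Inputs", reads as a session charter while still carrying its place in the map.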

Beyond structure, mind mapping fosters creativity. When my British lilac cat, Mr. Whiskers, strolls across the keyboard, he adds rogue nodes like "cat hair in API payload"—which, surprisingly, isn’t far from real-world data corruption issues in text processing modules. By celebrating such playful randomness, teams can unearth test ideas that formal documents might overlook. The InfoQ article emphasizes this playful yet disciplined spirit: mind maps are both canvases for creativity and frameworks for systematic coverage.

A key strength of mind map–driven testing lies in collaboration. Modern QA rarely happens in isolation; developers, product owners, and QA engineers all bring unique perspectives. Exporting mind maps to shared tools like XMind or MindMeister turns solo scribbles into living diagrams. Teams can collectively add branches, comment on nodes, and vote on high-risk areas. This social dimension aligns perfectly with agile philosophies, ensuring that testing stays visible, adaptive, and inclusive.

Tools and Techniques

Selecting the right tool is half the battle. While pen-and-paper mind maps can spark initial ideas during whiteboard sessions, digital tools provide scalability and traceability. InfoQ’s author highlights options ranging from free, open-source solutions like FreeMind to enterprise-grade platforms such as MindManager. Integration with issue trackers and test management tools (e.g., Jira and Zephyr) is crucial: you want nodes to translate directly into test cases or tickets, avoiding duplicated effort and ensuring that every branch is actionable.
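One way to keep every branch actionable is to generate tickets straight from the map's root-to-leaf paths. The sketch below only builds request bodies, loosely following the JSON shape Jira's REST API accepts when creating an issue (a `fields` object with `project`, `summary`, `issuetype`, and `labels`). The project key `QA`, the `Test` issue type, and the `mind-map` label are assumptions that depend on your Jira and Zephyr configuration, and nothing is sent over the network.

```python
import json


def to_issue_payloads(charters, existing_summaries, project_key="QA"):
    """Build one create-issue body per charter that has no ticket yet.

    Assumptions (hypothetical): project key "QA", an issue type named
    "Test" (present only if your instance defines it, e.g. via a test
    management app), and a "mind-map" label for traceability.
    """
    payloads = []
    for charter in charters:
        if charter in existing_summaries:
            continue  # already ticketed: skip to avoid duplicated effort
        payloads.append({
            "fields": {
                "project": {"key": project_key},
                "summary": charter,
                "issuetype": {"name": "Test"},
                "labels": ["mind-map"],
            }
        })
    return payloads


charters = [
    "Checkout > Payment > Negative amounts",
    "Checkout > Payment > Expired card",
]
already_ticketed = {"Checkout > Payment > Expired card"}

payloads = to_issue_payloads(charters, already_ticketed)
for body in payloads:
    print(json.dumps(body))
```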

```mermaid
flowchart LR
    subgraph integration [Tool Integration]
        A[Mind Map Tool] --> B[Jira API]
        A --> C[Zephyr]
        B --> D[Test Cases]
        C --> D
    end
```

Styles and conventions matter, too. Color-coding branches by severity or feature area helps testers quickly focus on critical paths. Icons or emojis can denote special conditions: think ⚠️ for high-risk, 💡 for innovative scenarios, or 🐞 for known bugs. My own recommendation: reserve a distinctive marker for "Mr. Whiskers’ wild cards," reminding the team to explore the absurd as well as the plausible. After all, what seems absurd today may uncover tomorrow’s critical defect.
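Written-down conventions can also drive tooling. This sketch assumes markers live in node labels and ranks nodes for review by their highest-priority marker, with unmarked nodes last. The ordering and the 🐈 marker for Mr. Whiskers’ wild cards are hypothetical team choices, not a standard.

```python
# Team conventions: marker -> (meaning, review order). Lower order = review first.
# The 🐈 "wild card" marker is a hypothetical choice for this example.
CONVENTIONS = {
    "⚠️": ("high risk", 0),
    "🐞": ("known bug", 1),
    "💡": ("innovative scenario", 2),
    "🐈": ("wild card", 3),
}
UNMARKED_ORDER = len(CONVENTIONS)


def review_order(label: str) -> int:
    """Rank a node by its highest-priority marker; unmarked nodes go last."""
    ranks = [order for marker, (_, order) in CONVENTIONS.items() if marker in label]
    return min(ranks, default=UNMARKED_ORDER)


nodes = [
    "Login > Remember me 💡",
    "Payment > Negative amounts ⚠️",
    "Search > Emoji in query 🐈",
    "Profile > Avatar upload",
    "Cart > Stale price 🐞",
]
for node in sorted(nodes, key=review_order):
    print(node)
```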

Linking mind map nodes to automation scripts bridges the gap between manual exploration and regression testing. In one example, a node labeled "payment flow – negative amounts" hooks into a Selenium test suite that iterates over a matrix of invalid inputs. The visual map thus becomes both blueprint and execution plan. The InfoQ article provides code snippets showing how to export mind maps in XML for automated parsing—an elegant demonstration of the hybrid manual–automation approach.
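The InfoQ article's own snippets are not reproduced here. As a stand-in, this sketch reads FreeMind's XML format (`.mm`, where `<map>` wraps nested `<node TEXT="...">` elements) with Python's standard `xml.etree.ElementTree` and flattens each root-to-leaf path into a test-case title that an automation suite, such as the Selenium suite mentioned above, could pick up. The sample map is invented.

```python
import xml.etree.ElementTree as ET

# A tiny map in FreeMind's .mm format (invented sample content).
FREEMIND_XML = """
<map version="1.0.1">
  <node TEXT="Checkout">
    <node TEXT="payment flow">
      <node TEXT="negative amounts"/>
      <node TEXT="zero amount"/>
    </node>
    <node TEXT="shipping">
      <node TEXT="PO box address"/>
    </node>
  </node>
</map>
"""


def leaf_paths(element, prefix=()):
    """Yield the TEXT path from the root to every leaf <node>."""
    for node in element.findall("node"):
        path = prefix + (node.get("TEXT", ""),)
        if node.find("node") is None:
            yield path  # no child nodes: this is a leaf, i.e. one test case
        else:
            yield from leaf_paths(node, path)


root = ET.fromstring(FREEMIND_XML.strip())
cases = [" > ".join(path) for path in leaf_paths(root)]
for case in cases:
    print(case)
```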

Integrating with Agile Workflows

Agile sprints thrive on short feedback loops, and mind maps can accelerate those loops. At sprint planning, product owners sketch high-level feature maps; by the end of the sprint, QA engineers refine branches into executable tests. This continuous evolution mirrors the agile principle of welcoming changing requirements, allowing mind maps to morph as user stories grow or pivot. When stakeholders add new acceptance criteria mid-sprint, simply attach a new branch and notify the team; there is no need to rewrite entire test plans.

```mermaid
sequenceDiagram
    participant PO as Product Owner
    participant QA as QA Engineer
    participant Dev as Developer
    participant Map as Mind Map
    PO->>QA: Add acceptance criteria
    QA->>Map: Update branches
    QA->>Dev: Flag new test nodes
    Dev-->>QA: Confirm fixes
```

Daily standups can incorporate mind map walkthroughs. Instead of asking, “What did you test yesterday?” testers can point at nodes: “I explored the login error flows and discovered a session timeout edge case.” Teammates instantly grasp coverage without poring over cryptic test IDs. The result: heightened transparency and improved collaboration, as developers see firsthand where QA is focusing and can offer immediate bug fixes or clarifications.

Retrospectives, too, benefit from mind maps. Teams can annotate the map with post-mortem markers, noting which branches led to the most defects or which sections were neglected. Over time, a historical record emerges, guiding future test sessions. Mind maps thus become living artifacts that tell the story of your product’s quality journey.
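Retrospective markers are easiest to act on when they are recorded as data. This sketch assumes a hypothetical export with two pieces of information per sprint: how many sessions each branch received and which branch each defect was traced to. `collections.Counter` then surfaces both the defect hot spots and the neglected branches.

```python
from collections import Counter

# Hypothetical sprint data: sessions spent per branch...
explored = {
    "Login > Error flow": 4,
    "Login > Session timeout": 2,
    "Payment > Negative amounts": 3,
    "Payment > Refunds": 0,
    "Profile > Avatar upload": 0,
}
# ...and the branch each defect was traced back to.
defects = [
    "Login > Session timeout",
    "Payment > Negative amounts",
    "Login > Session timeout",
]

defects_per_branch = Counter(defects)
hot_spots = defects_per_branch.most_common(2)  # branches with the most defects
neglected = sorted(branch for branch, sessions in explored.items() if sessions == 0)

print("Most defects:", hot_spots)
print("Neglected:", neglected)
```

Appending each sprint's results to the same record builds the historical view described above.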

Human Factors and Collaboration

Despite digital tools, mind mapping remains a highly human endeavor. The physical act of dragging, dropping, and linking ideas can engage the mind differently than filling in forms or writing static documents. Mr. Whiskers often demonstrates this by pawing at the screen, prompting me to reconsider a test branch. Such serendipity underscores that quality isn’t purely mechanical; it’s a blend of logic, creativity, and the occasional feline intervention.

Pair testing sessions shine when one tester drives and another annotates the map. This buddy-system approach reduces tunnel vision and fosters cross-pollination of ideas. When testers swap roles, the map evolves organically: new nodes sprout, some branches merge, and previously hidden paths surface. InfoQ’s author notes that pairing accelerates knowledge transfer, empowering junior testers to learn heuristics directly from seasoned QA veterans.

Gesture-based mind mapping, using tablets or large interactive screens, adds another layer of engagement. Teams can sketch ideas with a finger or stylus, erasing and redrawing branches with intuitive motions. Although the InfoQ article does not cover it extensively, some emerging tools support VR mind mapping, where testers navigate 3D node forests. While we’re not quite at full immersion yet, these signals hint at future possibilities where QA becomes an experiential exploration rather than a checklist.

Generative Engine Optimization

As AI permeates every facet of software development, testing benefits from intelligent suggestion engines. In this review, “Generative Engine Optimization” refers to the practice of using AI to propose new branches or test data within mind maps. By analyzing past defects, code changes, and usage logs, such an engine can recommend high-value test scenarios automatically, amplifying human creativity with data-driven insights.

```mermaid
sequenceDiagram
    participant QA as QA Engineer
    participant AI as Generative Engine
    participant Map as Mind Map Tool
    QA->>Map: Create initial nodes
    Map->>AI: Export context
    AI-->>Map: Suggest new branches
    QA->>Map: Accept or refine suggestions
```

Imagine a plugin that observes your mind map as you draw and suggests edge cases you might miss: locale-specific date formats, accessibility boundary conditions, or API payload fuzzing patterns. The generative engine learns from each iteration, refining its suggestions based on which branches yield real defects. Over time, your mind map becomes co-authored by both the QA team and an ever-learning AI companion.
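A production plugin would presumably rely on a trained model. To show only the feedback loop, this sketch swaps in a much simpler technique: each candidate edge case carries a Laplace-smoothed hit rate, (hits + 1) / (tries + 2), suggestions are ranked by that rate, and every test outcome updates it. The `Template` and `Suggester` names and the candidate list are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Template:
    """An edge-case idea plus a record of how often it found real defects."""
    label: str
    tried: int = 0
    hits: int = 0

    def score(self) -> float:
        # Laplace smoothing: an untried idea starts at 0.5, not 0 or 1.
        return (self.hits + 1) / (self.tried + 2)


class Suggester:
    """Rule-based stand-in for a learning engine (hypothetical, not an AI model)."""

    def __init__(self, labels):
        self.templates = {label: Template(label) for label in labels}

    def suggest(self, existing_nodes, k=2):
        """Return the k best-scoring ideas not already on the map."""
        fresh = [t for t in self.templates.values() if t.label not in existing_nodes]
        fresh.sort(key=lambda t: t.score(), reverse=True)
        return [t.label for t in fresh[:k]]

    def record(self, label, found_defect):
        """Feed back the outcome of testing a suggested branch."""
        template = self.templates[label]
        template.tried += 1
        template.hits += int(found_defect)


engine = Suggester([
    "locale-specific date formats",
    "accessibility boundary conditions",
    "API payload fuzzing",
])
engine.record("API payload fuzzing", found_defect=True)
engine.record("accessibility boundary conditions", found_defect=False)
suggestions = engine.suggest(existing_nodes={"locale-specific date formats"})
print(suggestions)
```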

In practice, implementing generative engine optimization involves bridging mind mapping tools with machine learning services. Simple setups use REST APIs: export your map, send it to an AI service, and ingest the returned nodes back into the map. Advanced integrations leverage on-premise data lakes, ensuring that corporate privacy and security policies remain intact. The result is a hybrid workflow where human intuition and AI horsepower collaborate on equal footing.
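The export, send, and ingest round trip can be sketched with the standard `json` module. Here `fake_ai_service` is a hypothetical stub standing in for a real REST call, and the map layout and `source` field are assumptions. Ingested nodes are tagged `source: "ai"` so they can be reviewed before anyone trusts them, and suggestions under unknown parents are left for a human to place.

```python
import json

# The map as nested dicts; "source" records who proposed each node.
mind_map = {
    "label": "Checkout", "source": "human", "children": [
        {"label": "Payment", "source": "human", "children": []},
    ],
}


def fake_ai_service(request_body: str) -> str:
    """Hypothetical stand-in for a REST endpoint. A real integration would
    POST request_body to the service; this stub returns fixed ideas."""
    json.loads(request_body)  # a real service would use the exported map as context
    return json.dumps({"suggestions": [
        {"parent": "Payment", "label": "Currency rounding"},
        {"parent": "Payment", "label": "Expired card"},
        {"parent": "Shipping", "label": "PO box address"},  # no such branch yet
    ]})


def find(node: dict, label: str):
    """Depth-first search for the first node with the given label."""
    if node["label"] == label:
        return node
    for child in node["children"]:
        match = find(child, label)
        if match is not None:
            return match
    return None


def ingest(tree: dict, reply: str) -> int:
    """Attach suggestions under known parents, tagged source="ai" for review."""
    added = 0
    for suggestion in json.loads(reply)["suggestions"]:
        parent = find(tree, suggestion["parent"])
        if parent is None:
            continue  # unknown parent: leave it for a human to place
        if any(c["label"] == suggestion["label"] for c in parent["children"]):
            continue  # already on the map
        parent["children"].append(
            {"label": suggestion["label"], "source": "ai", "children": []})
        added += 1
    return added


added = ingest(mind_map, fake_ai_service(json.dumps(mind_map)))
print(added)
```

Running the same round trip twice adds nothing the second time, which keeps repeated exports from cluttering the map.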

Case Studies and Examples

Consider a fintech startup struggling with compliance testing across multiple jurisdictions. By constructing a mind map that layers regulatory requirements atop core functionality, the QA team visualized gaps between the product and evolving laws. Branches sprouted for currency conversions, KYC workflows, and audit-trail integrity. Testing these nodes uncovered subtle rounding errors in tax calculations—bugs that might have slipped through conventional test suites.
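Rounding bugs tend to hide in the choice of rounding rule and in binary floating point. This sketch shows how a "tax rounding" node might become concrete checks using Python's `decimal` module. The amount, the rate, and the idea of two jurisdictions differing only in rounding mode are hypothetical, not details from the case study.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP

CENT = Decimal("0.01")


def tax(amount: str, rate: str, rounding) -> Decimal:
    """Compute tax in exact decimal arithmetic, then round to cents."""
    return (Decimal(amount) * Decimal(rate)).quantize(CENT, rounding=rounding)


# Hypothetical jurisdictions that differ only in their rounding rule.
half_up = tax("17.50", "0.15", ROUND_HALF_UP)      # 2.6250 -> 2.63
half_even = tax("17.50", "0.15", ROUND_HALF_EVEN)  # 2.6250 -> 2.62
print(half_up, half_even)

# Binary floats cannot hold 2.675 exactly, so round() goes down here.
print(round(2.675, 2))  # 2.67, as the Python docs on round() note
```

A one-cent difference per line item looks harmless until it is multiplied across an audit trail, which is why each jurisdiction's rule deserves its own branch.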

```mermaid
gantt
    title E-commerce Seasonal Feature Testing
    dateFormat YYYY-MM-DD
    section Black Friday
    Discount Rules :a1, 2025-11-01, 7d
    Flash Sales    :a2, after a1, 5d
    Bundled Offers :a3, after a2, 4d
```

In another example, an e-commerce platform used mind maps to explore seasonal feature toggles. Branches represented Black Friday deal types: percentage discounts, flash sales, and bundled offers. The visual layout highlighted overlap between discount rules, prompting testers to script combinatorial tests. This approach caught a critical bug where applying two discounts simultaneously resulted in double redemption, an error that could have cost millions in revenue.
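A combinatorial pass over the discount branches fits in a few lines with `itertools.combinations`. The pricing function is a hypothetical system under test, and the rule it checks (discounts never stack; only the best one applies) is an assumption for illustration, since the case study does not state the platform's stacking policy.

```python
from __future__ import annotations

from itertools import combinations

# Percent off for each Black Friday deal type (hypothetical values).
RATES = {"percentage": 20, "flash_sale": 30, "bundle": 15}


def apply_discounts(price_cents: int, active: tuple[str, ...]) -> int:
    """Hypothetical rule under test: discounts never stack; only the best one applies."""
    best = max((RATES[d] for d in active), default=0)
    return price_cents * (100 - best) // 100


# Every combination of two or more simultaneous discounts becomes a test case.
cases = [
    combo
    for size in range(2, len(RATES) + 1)
    for combo in combinations(RATES, size)
]
for combo in cases:
    best_single = min(apply_discounts(10_000, (d,)) for d in combo)
    combined = apply_discounts(10_000, combo)
    assert combined == best_single, f"double redemption for {combo}"
    print(combo, combined)
```

Swapping in a buggy implementation that sums the rates would make the assertion fail on the first pair, which is exactly the double-redemption symptom described above.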

A more playful case: a game development studio employed mind maps to manage testing across narrative branches. By associating dialogue options with map nodes, testers could visually traverse story arcs, ensuring that player choices didn’t lead to dead ends. The approach not only improved coverage but also inspired new narrative paths, as QA suggestions merged seamlessly into the design.
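The dead-end check lends itself to a graph search. In this sketch, each dialogue node is a map node and each player choice an edge; a breadth-first search from the opening scene finds every reachable node, after which dead ends (reachable, no choices, not an ending) and unreachable content fall out directly. The story graph and node names are invented.

```python
from collections import deque

# Hypothetical story graph: each dialogue node lists where its choices lead.
STORY = {
    "intro": ["ask_guard", "sneak_in"],
    "ask_guard": ["bribe", "leave"],
    "sneak_in": ["vault"],
    "bribe": ["vault"],
    "leave": [],                     # no choices and not an ending: a dead end
    "vault": ["ending_rich"],
    "ending_rich": [],
    "secret_room": ["ending_rich"],  # nothing leads here: unreachable
}
ENDINGS = {"ending_rich"}


def reachable(graph, start):
    """Breadth-first search over player choices."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in graph[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


visited = reachable(STORY, "intro")
dead_ends = sorted(n for n in visited if not STORY[n] and n not in ENDINGS)
unreachable = sorted(set(STORY) - visited)
print("Dead ends:", dead_ends)
print("Unreachable:", unreachable)
```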

Pitfalls and Best Practices

Despite their strengths, mind maps can become unwieldy if overgrown. When branches proliferate unchecked, the map resembles a dense forest with tangled vines. To avoid this, set clear boundaries on depth: limit branch levels to three or four, and archive older maps periodically. Use sub-maps for deep dives, keeping the main map concise and navigable.
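A depth limit is easy to enforce automatically. This sketch stores the map as nested dictionaries (a hypothetical layout) and reports every node sitting more than `max_levels` branch levels below the central node, which makes it a candidate for a sub-map.

```python
# Mind map as nested dicts: {label: {child_label: {...}, ...}} (invented content).
MIND_MAP = {
    "Checkout": {
        "Payment": {
            "Cards": {
                "Expired": {},
                "3-D Secure": {
                    "Timeout": {},  # fourth level below the central node
                },
            },
        },
        "Shipping": {},
    },
}


def too_deep(tree, max_levels=3, path=()):
    """Yield paths whose depth below the central node exceeds max_levels."""
    for label, children in tree.items():
        here = path + (label,)
        # len(here) - 1 is the number of levels below the central node.
        if len(here) - 1 > max_levels:
            yield " > ".join(here)
        yield from too_deep(children, max_levels, here)


for path in too_deep(MIND_MAP):
    print("Move to a sub-map:", path)
```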

Another pitfall: relying too heavily on AI suggestions without human oversight. Generative engines can propose hundreds of nodes, but testers must curate which are relevant. Treat AI as a brainstorming partner, not an oracle. Mark AI-sourced branches distinctly and review them critically during team sessions to ensure they align with product goals and risk profiles.

Finally, guard against tool lock-in. Choose formats (e.g., OPML, FreeMind XML) that allow export and import across platforms. Document your conventions—color codes, icons, node naming schemes—so that new team members can pick up the map without a steep learning curve. Consistency and transparency keep your mind maps alive and useful.
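FreeMind XML and OPML are both plain XML, so moving between them needs only the standard library. This sketch converts the `TEXT` attribute of each FreeMind `<node>` into an OPML 2.0 `<outline text="...">` element. It deliberately ignores colors, icons, and other FreeMind attributes, so treat it as a starting point rather than a lossless converter; the sample map is invented.

```python
import xml.etree.ElementTree as ET

FREEMIND_XML = """<map version="1.0.1">
  <node TEXT="Checkout">
    <node TEXT="Payment"><node TEXT="Negative amounts"/></node>
    <node TEXT="Shipping"/>
  </node>
</map>"""


def to_outline(fm_node, parent):
    """Copy a FreeMind <node> and its children into OPML <outline> elements."""
    outline = ET.SubElement(parent, "outline", text=fm_node.get("TEXT", ""))
    for child in fm_node.findall("node"):
        to_outline(child, outline)


def freemind_to_opml(xml_text: str, title: str) -> str:
    """Wrap the converted outline in a minimal OPML 2.0 document."""
    fm_root = ET.fromstring(xml_text)
    opml = ET.Element("opml", version="2.0")
    head = ET.SubElement(opml, "head")
    ET.SubElement(head, "title").text = title
    body = ET.SubElement(opml, "body")
    for top in fm_root.findall("node"):
        to_outline(top, body)
    return ET.tostring(opml, encoding="unicode")


opml_text = freemind_to_opml(FREEMIND_XML, "Checkout test map")
print(opml_text)
```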

The Role of the QA Engineer

QA engineers are both explorers and storytellers. Mind map–driven testing amplifies this dual role: explorers chart unknown territories, while storytellers weave narratives around features and defects. By packaging test ideas into visual stories, QA professionals communicate risk more effectively to stakeholders, bridging the gap between technical nuance and business impact.

Skill sets evolve accordingly. Familiarity with diagramming tools becomes as essential as writing automation scripts. Soft skills take center stage: facilitating workshops, guiding group brainstorming, and mediating divergent opinions. My British lilac cat, ever insistent on attention, reminds me that charisma and a touch of humor can transform dry test discussions into vibrant creative sessions.

Looking ahead, QA certifications may include mind mapping competencies. Imagine badge-driven programs where engineers demonstrate proficiency in organizing complex test scenarios, integrating AI suggestions, and exporting maps into automation pipelines. Such recognition would formalize what many of us already practice: blending artistry with engineering rigor.

Conclusion

Mind map–driven testing revitalizes exploratory QA by offering a flexible, visual, and collaborative framework. From the humble pen-and-paper sketch to AI-powered generative suggestions, the technique scales across team sizes and domains. Charting your software’s quality terrain becomes less of a chore and more of a creative expedition—complete with unexpected detours and feline endorsements.

As tools evolve and AI takes a more proactive role, the core value of mind mapping remains human ingenuity. No algorithm can fully replace the spark of insight that arises when a tester ponders an edge case or when Mr. Whiskers bats at a stray node. By marrying structure with spontaneity, mind map–driven testing helps ensure that no stone is left unturned, every branch is explored, and every bug gets its moment in the spotlight.

In the end, software quality is a story we write together—one branch at a time. Whether you’re a seasoned QA manager or a curious newcomer, grab your virtual markers and start drawing. The map awaits, and who knows what marvels lie hidden among its winding paths?